- EU law establishes new AI legal framework based on risks

- Companies say compliance poses cost, complexity concerns

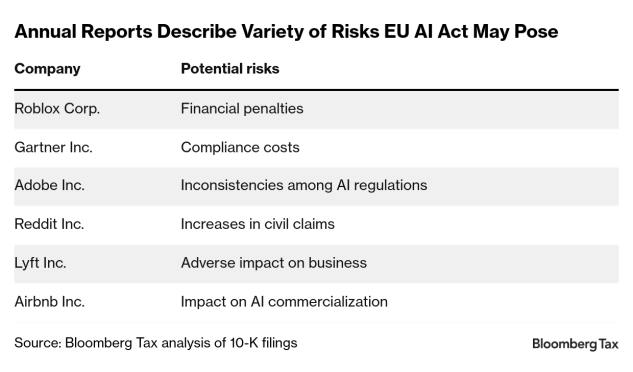

The European Union’s Artificial Intelligence Act, which creates obligations for providers, distributors, and manufacturers of AI systems, may impose hefty compliance costs and force companies to alter their product offerings across the Atlantic, businesses say. In many cases, this marks the first time companies have disclosed concerns about the first-of-its-kind EU law in the sections of their annual reports that outline potential risks to their operations and financial stability.

“It probably reflects the fact that there’s going to be potentially aggressive enforcement of the EU AI Act,” said Minesh Tanna, Simmons & Simmons LLP’s global AI lead.

The “risk factors” section of 10-K filings is intended to insulate companies from claims of securities fraud by flagging possible areas of concern for potential investors. Typical risks range from litigation costs to political and economic troubles in the countries in which companies operate.

The EU AI Act, which entered into force in August, joins an existing list of technology and data privacy laws that businesses have named in their reports. Its first provisions, which block overly risky uses of AI in the EU, took effect in February.

Ambiguity over what the law will require adds to corporate angst.

“It’s when you start looking at how the regulations are enforced that you get a real feel of how the adjudicators will decide on these issues,” said Elisa Botero, a partner at Curtis, Mallet-Prevost, Colt & Mosle LLP.

Diverse Concerns

The law’s risk-based rules aim to ensure AI systems in the EU are safe and respect fundamental rights, weeding out practices like AI-based deception. The regulation also establishes a legal framework for providers of general-purpose AI systems, such as OpenAI Inc.’s GPT-3 large language model.

Abiding by the law “may impose significant costs on our business,” consulting firm

These compliance costs can result from companies hiring new personnel, paying external advisers, or other operational costs, according to Jenner & Block London LLP special counsel Ronan O’Reilly.

Airbnb told investors that regulations including the EU AI Act “may impact our ability to use, procure and commercialize” AI and machine learning tools in the future.

“We’re seeing—and we’re going to see more of this—the risk of fragmentation with what products and services are offered in different markets,” said Joe Jones, the director of research and insights for the International Association of Privacy Professionals.

The law could also require the use of AI to be altered or restricted in available products depending on the level of risk involved, the video game developer

EU officials have assigned more compliance requirements to higher-risk uses of AI, such as certain types of biometric identification. These obligations can include detailed documentation, human oversight, and data governance measures.

There’s ambiguity in how the law defines the “high risk” category of AI uses, O’Reilly said.

Companies may have to look to a whole suite of separate EU legislation governing areas such as transportation to fully determine whether their AI systems fit into the “high risk” category, O’Reilly said. Those laws could have their own ambiguities, further complicating the picture.

The EU is working on supplemental guidelines to give companies a better sense of what their assessments should look like, said Gaia Morelli, counsel at Curtis.

For example, the European Commission published further guidelines on prohibited AI practices under the law in February.

Uncertain Fallout

Enforcement of the EU AI law is complicated because of the multiple players involved.

While the European AI Office within the European Commission has the sole competence to regulate general-purpose AI systems under the law, it will generally be up to the 27 member states’ officials to enforce the rules regarding high-risk uses of AI, said Tanna, of Simmons & Simmons.

“You could be facing action in multiple member states in respect to the same alleged issue,” Tanna said.

Breaching the act’s requirements could result in fines of up to 35 million euros (about $36 million) or 7% of annual global turnover for the previous year, whichever is higher, Roblox said in its report.

Meta, Adobe, Airbnb, Lyft, Mastercard, Gartner, and Roblox didn’t return requests for additional comment on their 10-Ks.

Future Disclosure

AI risk-flagging is expected to create a domino effect as companies learn more about the EU law.

“Companies read one another’s 10-Ks, so there’s always a bit of hawkishness,” said Jones, of the International Association of Privacy Professionals, adding that organizations may find it important to get on the same page.

Businesses need strong risk management systems and employees who know the entire lifecycle of AI development, said Don Pagach, director of research for the Enterprise Risk Management Initiative at North Carolina State University.

This year’s disclosures might prompt further concerns from the public about companies’ AI governance, Jones said.

“It will drive investor questioning around, ‘Well, what’s your response to the AI Act? Who is accountable in the organization?’” he said.

Ultimately, people should care about the EU law because of the bloc’s market power, Botero said. The law applies not only to companies considering expanding into the EU in the future, but also to those that already have EU clients, she said.

“If you’re looking at companies that want to build AI systems, you can’t ignore one of the largest markets in the world,” she said.

Bloomberg Law provides trusted coverage of current events enhanced with legal analysis.